In two previous articles, you saw how new behavior of table variables in SQL Server 2019 can improve execution plans. This article demonstrates another aspect of this behavior, which is by design neither good, nor bad. Depending on data and execution context, it could end up as an improvement, but it can also degrade performance and introduce new problems.

Consider the following stored procedure that deal with a query similar to the one in the previous article. It accepts a single input parameter for the product color and has a table variable that collects product ids for that color, while the second statement returns order details for those products:

CREATE OR ALTER PROCEDURE dbo.TVDeferred_Demo

@Color NVARCHAR(15)

AS

BEGIN

DECLARE @T AS TABLE(ProductID INT PRIMARY KEY);

INSERT INTO @T SELECT ProductID FROM Production.Product WHERE Color = @Color;

SELECT * FROM @T t

INNER JOIN Sales.SalesOrderDetail od on t.ProductID = od.ProductID

INNER JOIN Sales.SalesOrderHeader h on h.SalesOrderID = od.SalesOrderID

ORDER BY od.UnitPrice DESC;

END

Again, we will execute this procedure under both compatibility levels 140 and 150. Let’s ensure that the database is in the SQL Server 2017 related mode and let’s invoke the above created procedure for the first time with the parameter ‘White’:

ALTER DATABASE AdventureWorks2019 SET COMPATIBILITY_LEVEL = 140;

GO

EXEC dbo.TVDeferred_Demo N'White';

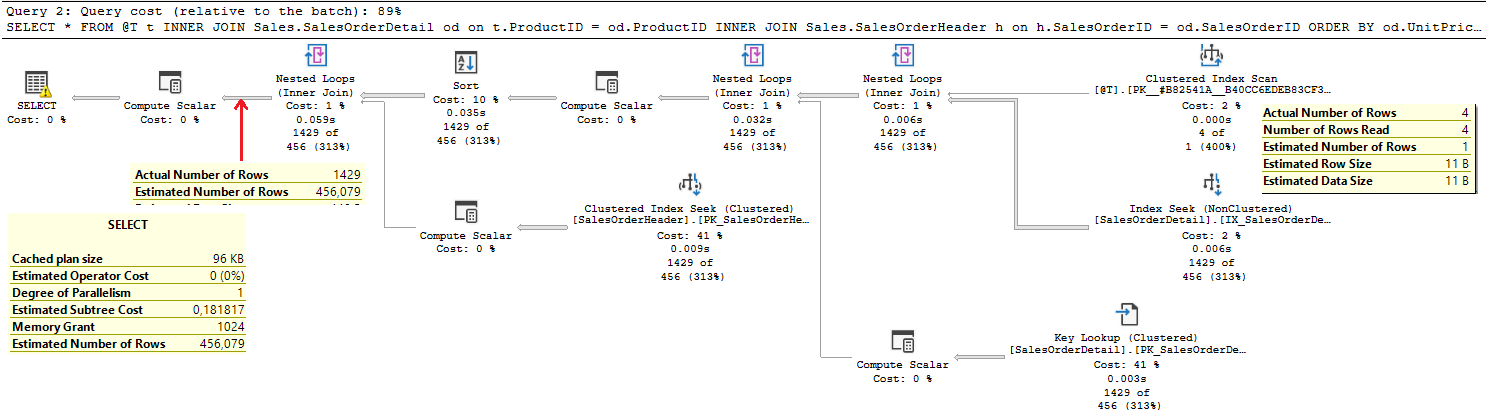

Here is the execution plan for the second query in the stored procedure, with some extracted execution parameters:

As you can see, this is a Nested Loop Join based plan, which is usual, when the processing starts with a table variable, since its cardinality prior to SQL Server 2019 is fixed to 1. You can also see that all values are underestimated, which leads to a memory grant of only 1 MB.

When you call the same procedure with another parameter, it will reuse the plan from cache. What would be the plan, if we would call the procedure with a parameter which is not so high selective? Let’s ensure that there is no plan in cache, and call the same procedure with the parameter ‘Black’:

DBCC FREEPROCCACHE;

GO

EXEC dbo.TVDeferred_Demo N'Black';

When you observe the execution plan carefully, you will see exactly the same plan. Although, there are 93 products with the black color, all estimations and plan decisions remain the same, because the cardinality of the input table variable did not and could not be changed – it was still 1.

Since the actual number of rows is significantly greater than in the call with the parameter ‘White’, you can see here sort warnings because this time 1MB of memory grant was not sufficient for sorting. But, the execution plan is exactly the same as for the first call.

Prior to SQL Server 2019, the execution plan for the second query in this stored procedure was always the same, regardless of parameter used for the first invocation and thus plan generation. Since the table variable has cardinality of 1, all estimations and the execution plan will be the same. We can say, using a table variable in this stored procedure and passing it to the second query neutralizes parameter sniffing effect. That does not mean, this is good or bad for all executions (you saw sort warnings and they are always bad), but the plan was stable, it did not change even after failover or clearing cache. If you call this stored procedure usually with high selective parameters, you can consider this plan as a good plan.

In SQL Server 2019, since table variable could have different cardinality, this stored procedure is prone to parameter sniffing and depending on the first execution parameter, you can have different execution plans:

ALTER DATABASE AdventureWorks2019 SET COMPATIBILITY_LEVEL = 150;

GO

EXEC dbo.TVDeferred_Demo N'White';

GO

DBCC FREEPROCCACHE;

GO

EXEC dbo.TVDeferred_Demo N'Black';

GO

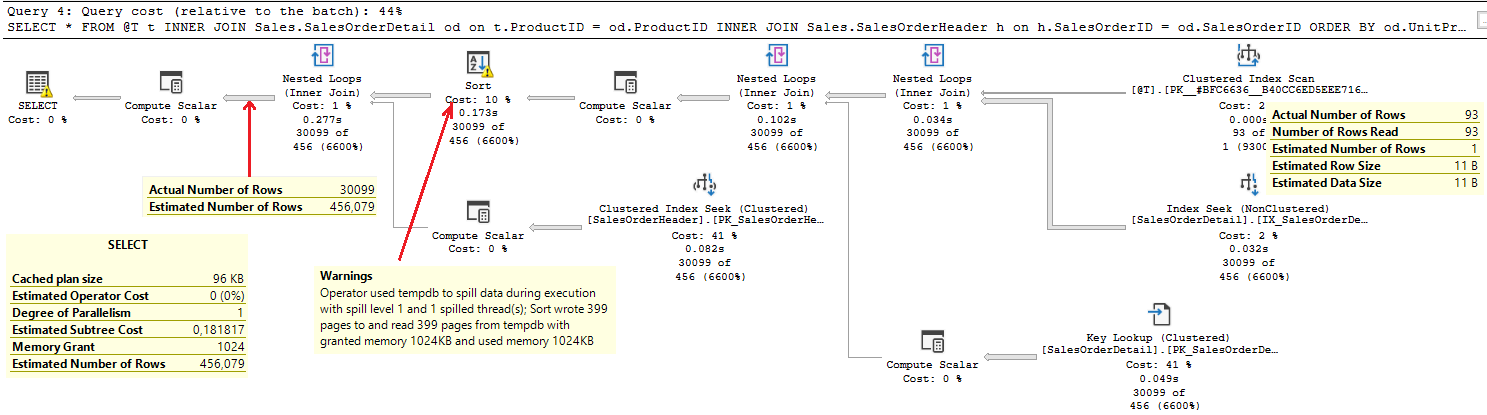

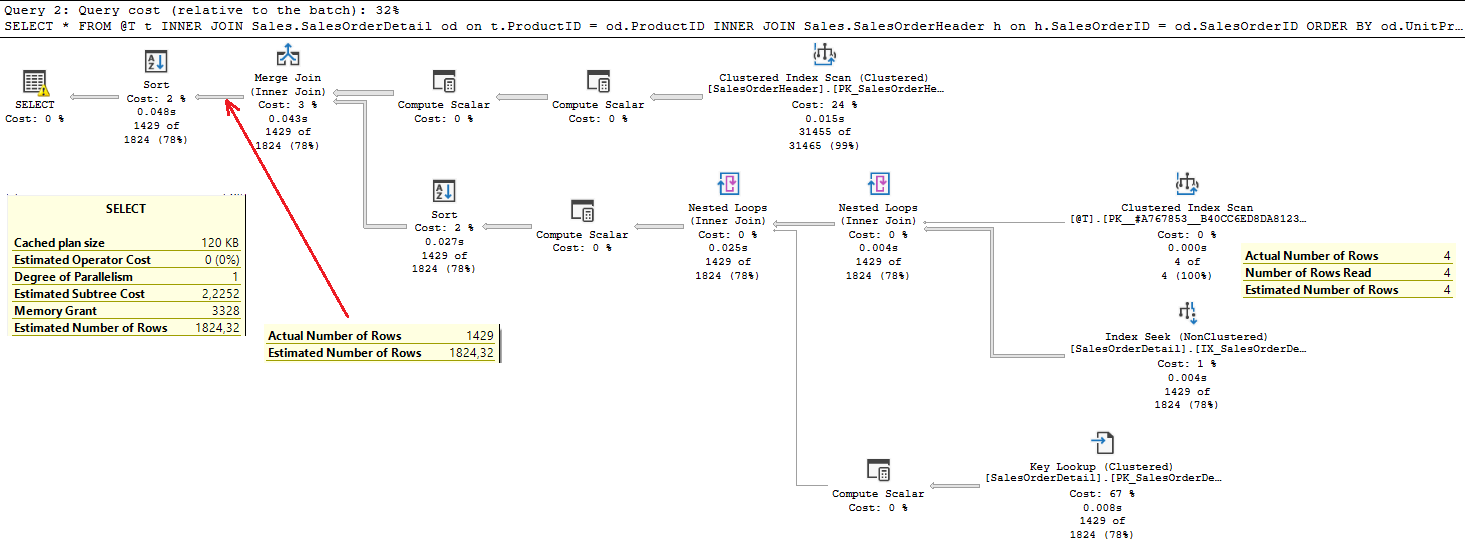

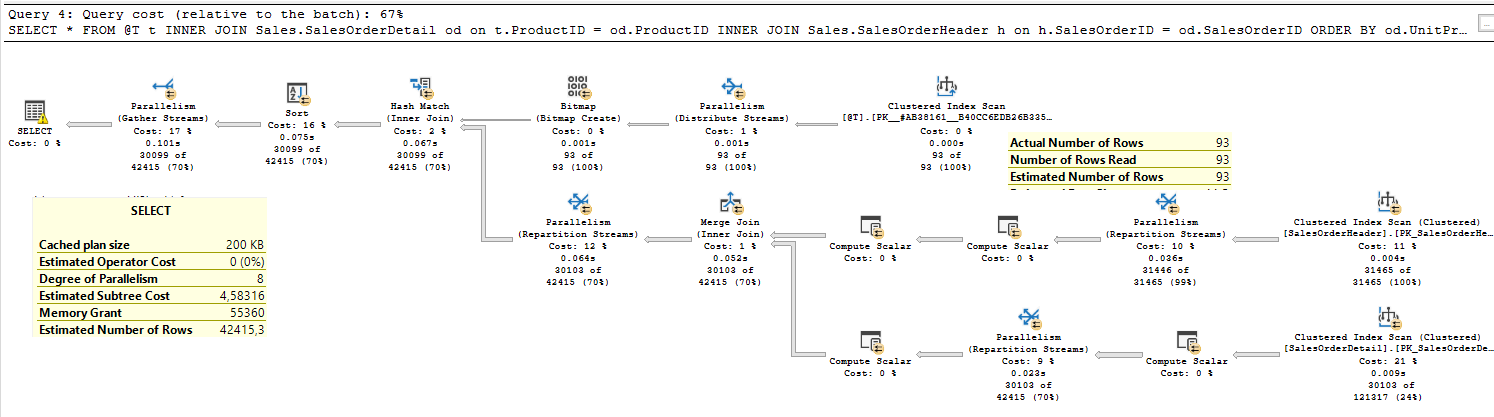

Here is the execution plan under CL 150 with a high selective parameter:

And another one, when the first invocation is with a low selective parameter:

You can see two completely different plans (different plan operators and execution parameters). The only difference between two calls is the number of rows in a table variable, therefore in SQL Server 2019 cardinality of the table variable can manage the entire execution plan. Unlike with SQL Server 2017, the execution plan depends on the parameter used for the first execution. If you used a low selective parameter for the first execution, but most of the calls are with the high selective parameters, you will not be happy with the new table variable behavior. And even if the current plan is good for your workload, there is no guarantee that after it disappear from cache the new one will be the same. With old table variable behavior the plan was stable.

Queries within stored procedures in SQL Server 2019 that use table variables will be more prone to parameter sniffing. Although these estimations will be more accurate than the ones prior to SQL Server 2019, that does not mean the execution plan will be better. They could be better then your current plan, but, as you saw in this article, they could introduce performance regression and instability.

Why then I am classifying it as a “bad” thing? Simply because I do not have significantly bad plans on the production system caused by the table variable’s cardinality. Yes, I had them initially, but I had to fix them, I could not wait until a better estimation comes. Therefore, I do not expect significant improvements in production workloads, the code can be aligned with this new behavior for future development, of course, but existing code is more-less fixed. However, some plans that are considered as stable plans due to fixed table variable’s cardinality prior to SQL Server 2019, can be now prone to parameter sniffing, and most probably, we need to fix them. Therefore, I am not so happy wit this aspect of the new table variables’s behavior.

In the next article, and the last one in this series, you’ll see another important side effect of this behavior, that can break some of performance workarounds implemented in solutions in previous SQL Server versions.

Thanks for reading.

[…] Milos Radivojevic points out the downside to table variable deferred compilation in SQL Server 2019: […]