Prior to SQL Server 2019, cardinality of a table variable was hardcoded to 1, regardless of number of rows in it. The only way to see a different (and accurate) value there is to run a statement with the OPTION (RECOMPILE). Without this option, SQL Server assumes there will be only one row in a table variable.

Prior to SQL Server 2019, cardinality of a table variable was hardcoded to 1, regardless of number of rows in it. The only way to see a different (and accurate) value there is to run a statement with the OPTION (RECOMPILE). Without this option, SQL Server assumes there will be only one row in a table variable.

SQL Server 2019 introduced a new database engine feature called Table variable deferred compilation. During optimization and initial compilation, this feature will propagate cardinality estimates that are based on actual table variable row counts. This accurate row count information will be used for optimizing downstream plan operations.

That means, there will be no more an estimation, since the actual number of rows is known. This sounds very good, because the estimation of 1 was almost always wrong and now the estimated number of rows coming from a table variable will be always correct. Therefore, in SQL Server 2019, we should expect better estimations and better plans for queries that use table variables.

Unlike temporary tables, table variables still have no statistics; this is not changed in SQL Server 2019. According to the documentation, the only change is the cardinality of the table. Use the following code to perform small test to check this:

--ensure that CL is 140

ALTER DATABASE DB2019 SET COMPATIBILITY_LEVEL = 140;

GO

DECLARE @t TABLE(id INT)

INSERT INTO @t SELECT n FROM dbo.GetNums(5000);

SELECT * FROM @t;

GO

ALTER DATABASE DB2019 SET COMPATIBILITY_LEVEL = 150;

GO

DECLARE @t TABLE(id INT)

INSERT INTO @t SELECT n FROM dbo.GetNums(5000);

SELECT * FROM @t;

GO

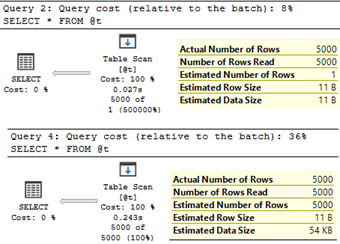

Execution plans show the change in SQL Server 2019 (under the compatibility level 150):

Let us try to find out what would be the estimation, if we would have a simple predicate in the WHERE clause, like in this query:

--ensure that CL is 140

ALTER DATABASE DB2019 SET COMPATIBILITY_LEVEL = 140;

GO

DECLARE @t TABLE(id INT)

INSERT INTO @t SELECT 1 + ABS(CHECKSUM(NEWID())) % 100 FROM dbo.GetNums(5000);

SELECT * FROM @t WHERE id = 6;

GO

ALTER DATABASE DB2019 SET COMPATIBILITY_LEVEL = 150;

GO

DECLARE @t TABLE(id INT)

INSERT INTO @t SELECT 1 + ABS(CHECKSUM(NEWID())) % 100 FROM dbo.GetNums(5000);

SELECT * FROM @t WHERE id = 6;

GO

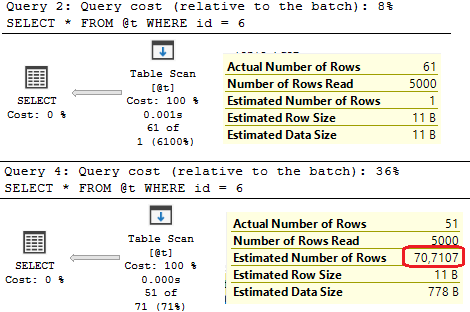

Let’s observer the execution plans and estimated values to check, whether SQL Server 2019 comes up with different estimations:

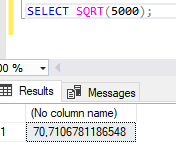

Yes, this is not 1 anymore, but some other value – 70.7107. This magic number is actually the square root of the table variable cardinality.

This is an assumption of the Cardinality Estimator from SQL Server 2014, when statistics on the predicate leading column is missing.

Just to note here that a temporal table would some with better estimation, since it has statistics (it is created on-the-fly, when you refer to the table in a statement). Here is the same code, but with a temporal table:

DROP TABLE IF EXISTS #T;

CREATE TABLE #T (id INT);

INSERT INTO #T SELECT 1 + ABS(CHECKSUM(NEWID())) % 100 FROM dbo.GetNums(5000);

SELECT * FROM #T WHERE id = 6;

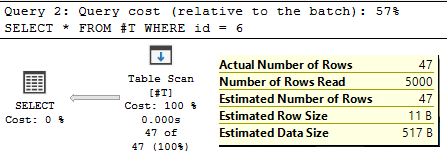

By observing the execution plan, we can see that the calculated statistics object helped SQL Server to come up even with an accurate estimation:

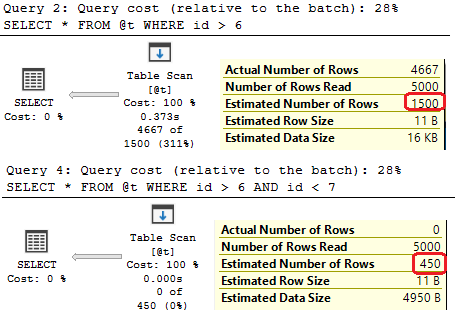

We saw that the estimated number of rows in a table variable with an equality predicate in SQL Server 2019 is equal to the square root of the table variable cardinality. In case of inequality predicates, the estimation is 30% of the table variable cardinality, while range operator or BETWEEN operator expects 9% of the total number of rows in the table variable.

The behavior of table variables is changed in SQL Server 2019. Its cardinality is not 1 anymore, but the actual number of rows, and the estimation for queries with table variables predicates has been changed too. Prior to SQL Server 2019, table variables were almost always underestimated, sometimes very significantly and were responsible for some bad execution plans. However, since this behavior was known and fix from the beginning (18 years ago!) and you knew that the estimation will be 1, it was also (mis)used to fix or workaround some issues with cardinality overestimation in complex queries. Now, this “fix” could break your “fix”.

I will cover these situations in the next article The Good, The Bad and The Ugly of Table Variable Deferred Compilation.

Thanks for reading.